Since the release of ChatGPT last year, I’ve heard some version of the same thing over and over again: What is going on? The rush of chatbots and endless “AI-powered” apps has made starkly clear that this technology is poised to upend everything, or at least something. Yet even the AI experts are struggling with a dizzying feeling that for all the talk of its transformative potential, so much about this technology is veiled in secrecy.

It isn’t just a feeling. More and more of this technology, once developed through open research, has become almost completely hidden within corporations that are opaque about what their AI models are capable of and how they are made. Transparency isn’t legally required, and the secrecy is causing problems: Earlier this year, The Atlantic revealed that Meta and others had used nearly 200,000 books to train their AI models without the compensation or consent of the authors.

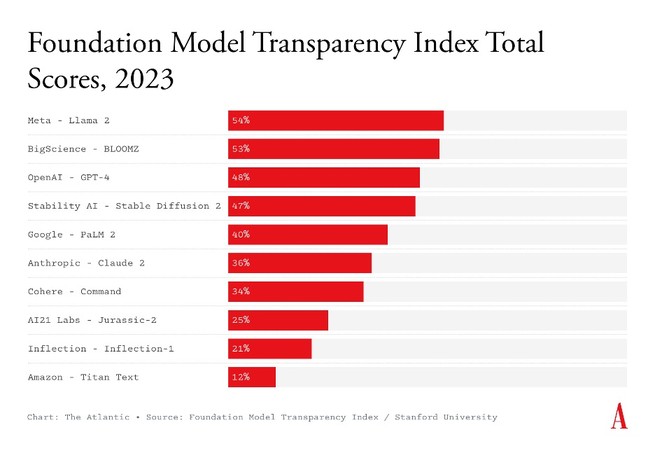

Now we have a way to measure just how bad AI’s secrecy problem actually is. Yesterday, Stanford University’s Center for Research on Foundation Models launched a new index that tracks the transparency of 10 major AI companies, including OpenAI, Google, and Anthropic. The researchers graded each company’s flagship model based on whether its developers publicly disclosed 100 different pieces of information, such as what data it was trained on, the wages paid to the data and content-moderation workers who were involved in its development, and when the model should not be used. One point was awarded for each disclosure. Among the 10 companies, the highest-scoring barely got more than 50 out of the 100 possible points; the average is 37. Every company, in other words, gets a resounding F.

Take OpenAI, which was named to indicate a commitment to transparency. Its flagship model, GPT-4, scored a 48, losing significant points for not revealing information such as the data that were fed into it, how it treated personally identifiable information that may have been captured in said scraped data, and how much energy was used to produce the model. Even Meta, which has prided itself on openness by allowing people to download and adapt its model, scored only 54 points. “A way to think about it is: You are getting baked cake, and you can add decorations or layers to that cake,” says Deborah Raji, an AI accountability researcher at UC Berkeley who wasn’t involved in the research. “But you don’t get the recipe book for what’s actually in the cake.”

Many companies, including OpenAI and Anthropic, have held that they keep such information secret for competitive reasons or to prevent harmful proliferation of their technology, or both. I reached out to the 10 companies indexed by the Stanford researchers. An Amazon spokesperson said the company looks forward to carefully reviewing the index. Margaret Mitchell, a researcher and chief ethics scientist at Hugging Face, said the index misrepresented BLOOMZ as the firm’s model; it was in fact produced by an international research collaboration called the BigScience project that was co-organized by the company. (The Stanford researchers acknowledge this in the body of the report. For this reason, I marked BLOOMZ as a BigScience model, not a Hugging Face one, on the chart above.) OpenAI and Cohere declined a request for comment. None of the other companies responded.

The Stanford researchers selected the 100 criteria based on years of existing AI research and policy work, focusing on inputs into each model, facts about the model itself, and the final product’s downstream impacts. For example, the index references scholarly and journalistic investigations into the poor pay for data workers who help perfect AI models to explain its determination that the companies should specify whether they directly employ the workers and any labor protections they put in place. The lead creators of the index, Rishi Bommasani and Kevin Klyman, told me they tried to keep in mind the kinds of disclosures that would be most helpful to a range of different groups: scientists conducting independent research about these models, policy makers designing AI regulation, and consumers deciding whether to use a model in a particular situation.

In addition to insights about specific models, the index reveals industry-wide gaps of information. Not a single model the researchers assessed provides information about whether the data it was trained on had copyright protections or other rules restricting their use. Nor do any models disclose sufficient information about the authors, artists, and others whose works were scraped and used for training. Most companies are also tight-lipped about the shortcomings of their models, whether their embedded biases or how often they make things up.

That every company performs so poorly is an indictment of the industry as a whole. In fact, Amba Kak, the executive director of the AI Now Institute, told me that in her view, the index was not high enough of a standard. The opacity within the industry is so pervasive and ingrained, she told me, that even 100 criteria don’t fully reveal the problems. And transparency isn’t an esoteric concern: Without full disclosures from companies, Raji told me, “it’s a one-sided narrative. And it’s almost always the optimistic narrative.”

In 2019, Raji co-authored a paper showing that several facial-recognition products, including ones being sold to the police, worked poorly on women and people of color. The research shed light on the risk of law enforcement using faulty technology. As of August, there have been six reported cases of police falsely accusing people of a crime in the U.S. based on flawed facial recognition; all of the accused are Black. These latest AI models pose similar risks, Raji said. Without giving policy makers or independent researchers the evidence they need to audit and back up corporate claims, AI companies can easily inflate their capabilities in ways that lead consumers or third-party app developers to use faulty or inadequate technology in crucial contexts such as criminal justice and health care.

There have been rare exceptions to the industry-wide opacity. One model not included in the index is BLOOM, which was similarly produced by the BigScience project (but is different from BLOOMZ). The researchers for BLOOM conducted one of the few analyses available to date of the broader environmental impacts of large-scale AI models and also documented information about data creators, copyright, personally identifiable information, and source licenses for the training data. It shows that such transparency is possible. But changing industry norms will require regulatory mandates, Kak told me. “We cannot rely on researchers and the public to be piecing together this map” of information, she said.

Perhaps the biggest clincher is that across the board, the tracker finds that all of the companies have particularly abysmal disclosures in “impact” criteria, which include the number of users who use their product, the applications being built on top of the technology, and the geographic distribution of where these technologies are being deployed. This makes it far more difficult for regulators to track each firm’s sphere of control and influence, and to hold them accountable. It’s much harder for consumers as well: If OpenAI technology is helping your kid’s teacher, assisting your family doctor, and powering your office productivity tools, you may not even know. In other words, we know so little about these technologies we’re coming to rely on that we can’t even say how much we rely on them.

Secrecy, of course, is nothing new in Silicon Valley. Nearly a decade ago, the tech and legal scholar Frank Pasquale coined the phrase black-box society to refer to the way tech platforms were growing ever more opaque as they solidified their dominance in people’s lives. “Secrecy is approaching critical mass, and we are in the dark about crucial decisions,” he wrote. And yet, despite the litany of cautionary tales from other AI technologies and social media, many people have grown comfortable with black boxes. Silicon Valley spent years establishing a new and opaque norm; now it’s just accepted as a part of life.